Building agents with an LLM (large language model) as the core controller is a cool concept. Several proof-of-concept demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potential of LLMs extends beyond generating well-written copy, stories, essays and programs; an LLM can be framed as a powerful general problem solver.

Agent System Overview

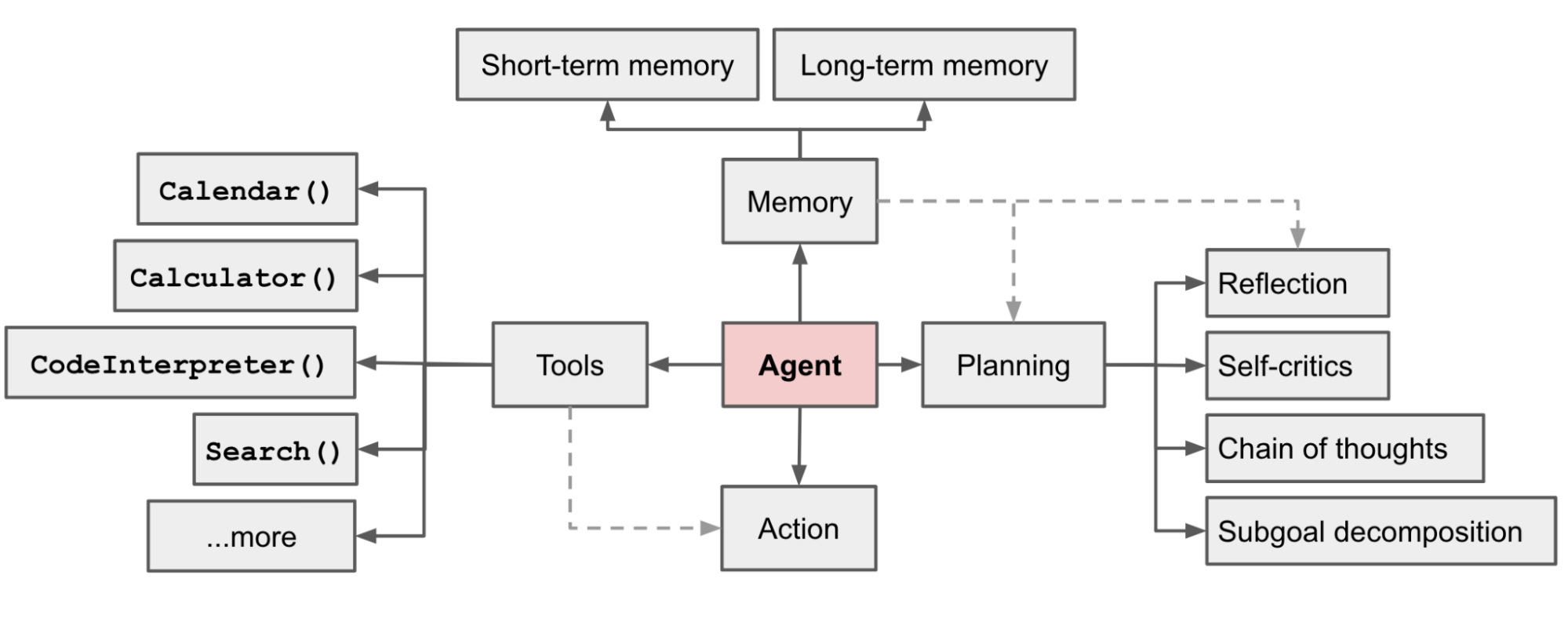

In an LLM-powered autonomous agent system, the LLM functions as the agent’s brain, complemented by several key components:

- Planning
  - Subgoal and decomposition: The agent breaks down large tasks into smaller, manageable subgoals, enabling efficient handling of complex tasks.
  - Reflection and refinement: The agent can do self-criticism and self-reflection over past actions, learn from mistakes and refine them for future steps, thereby improving the quality of final results.
- Memory
  - Short-term memory: I would consider all the in-context learning (see Prompt Engineering) as utilizing short-term memory of the model to learn.
  - Long-term memory: This provides the agent with the capability to retain and recall (infinite) information over extended periods, often by leveraging an external vector store and fast retrieval.
- Tool use
  - The agent learns to call external APIs for extra information that is missing from the model weights (often hard to change after pre-training), including current information, code execution capability, access to proprietary information sources and more.

Fig. 1. Overview of an LLM-powered autonomous agent system.

Component One: Planning

A complicated task usually involves many steps. An agent needs to know what they are and plan ahead.

Task Decomposition

Chain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps.

CoT transforms big tasks into multiple manageable tasks and sheds light on the model’s thinking process.

Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure.

The search process can be BFS (breadth-first search) or DFS (depth-first search), with each state evaluated by a classifier (via a prompt) or by majority vote.
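The BFS variant of this search can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: `propose` and `score` are hypothetical stand-ins for the two LLM prompts (thought generation and state evaluation), and the toy versions below only exist so the sketch runs without a model.

```python
from typing import Callable, List


def tree_of_thoughts_bfs(
    problem: str,
    propose: Callable[[str, List[str]], List[str]],
    score: Callable[[str, List[str]], float],
    depth: int,
    beam_width: int,
) -> List[str]:
    """Breadth-first search over partial chains of thoughts.

    `propose` generates candidate next thoughts for a partial chain and
    `score` rates a partial chain; both are assumed to wrap LLM prompts.
    """
    frontier: List[List[str]] = [[]]  # each state is the list of thoughts so far
    for _ in range(depth):
        candidates = [
            state + [thought]
            for state in frontier
            for thought in propose(problem, state)
        ]
        if not candidates:
            break
        # Keep only the most promising states at this level of the tree.
        candidates.sort(key=lambda s: score(problem, s), reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


# Toy stand-ins: pick digits whose sum should reach the target in `problem`.
def toy_propose(problem: str, state: List[str]) -> List[str]:
    return ["1", "2", "3"]


def toy_score(problem: str, state: List[str]) -> float:
    return -abs(int(problem) - sum(int(t) for t in state))


best = tree_of_thoughts_bfs("6", toy_propose, toy_score, depth=2, beam_width=2)
```

With a real model, `beam_width` controls how many partial chains survive each step, which is the main cost knob of the search.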

Task decomposition can be done (1) by the LLM with simple prompting like "Steps for XYZ.\n1." or "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions, e.g. "Write a story outline." for writing a novel, or (3) with human inputs.

Another quite distinct approach, LLM+P (Liu et al. 2023), relies on an external classical planner to do long-horizon planning. This approach uses the Planning Domain Definition Language (PDDL) as an intermediate interface to describe the planning problem.

In this process, the LLM (1) translates the problem into “Problem PDDL”, then (2) requests a classical planner to generate a PDDL plan based on an existing “Domain PDDL”, and finally (3) translates the PDDL plan back into natural language.

Essentially, the planning step is outsourced to an external tool, assuming the availability of domain-specific PDDL and a suitable planner. This assumption is common in certain robotic setups but does not hold in many other domains.
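The three LLM+P steps can be written as a small pipeline. This is a sketch under assumptions: `llm` and `planner` are hypothetical callables (any text-completion function and any classical planner wrapper would do), the prompt wording is invented, and the fake versions below only make the example runnable.

```python
from typing import Callable


def llm_plus_p(
    task_description: str,
    domain_pddl: str,
    llm: Callable[[str], str],
    planner: Callable[[str, str], str],
) -> str:
    # (1) The LLM translates the natural-language task into Problem PDDL.
    problem_pddl = llm(f"Translate into a PDDL problem:\n{task_description}")
    # (2) A classical planner solves it against the existing Domain PDDL.
    pddl_plan = planner(domain_pddl, problem_pddl)
    # (3) The LLM translates the PDDL plan back into natural language.
    return llm(f"Describe this PDDL plan in plain English:\n{pddl_plan}")


# Fake components (assumptions for illustration only).
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Translate"):
        return "(define (problem stack-a-on-b) ...)"
    return "Pick up block a, then stack it on block b."


def fake_planner(domain: str, problem: str) -> str:
    return "(pick-up a)\n(stack a b)"


summary = llm_plus_p(
    "Put block a on block b.",
    "(define (domain blocks) ...)",
    fake_llm,
    fake_planner,
)
```

Note that the LLM never plans here; it only translates in and out of PDDL, which is the point of the approach.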

Self-Reflection

Self-reflection is a vital aspect that allows autonomous agents to improve iteratively by refining past action decisions and correcting previous mistakes. It plays a crucial role in real-world tasks where trial and error is inevitable.

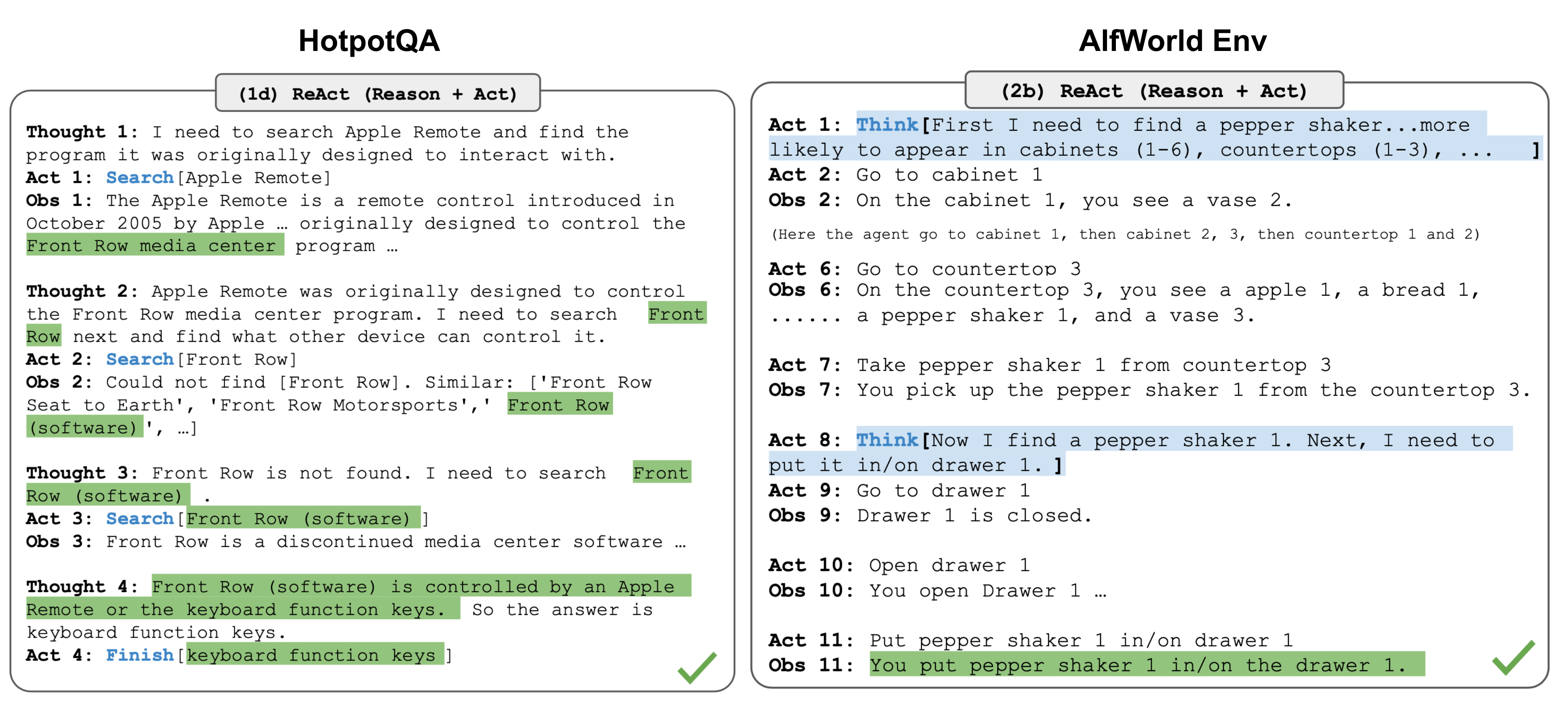

ReAct (Yao et al. 2023) integrates reasoning and acting within the LLM by extending the action space to be a combination of task-specific discrete actions and the language space.

The former enables the LLM to interact with the environment (e.g. use the Wikipedia search API), while the latter prompts the LLM to generate reasoning traces in natural language.

The ReAct prompt template incorporates explicit steps for the LLM to think, roughly formatted as:

Thought: ...

Action: ...

Observation: ...

... (Repeated many times)
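A Thought / Action / Observation loop of this shape can be sketched as follows. The `tool[argument]` action syntax, the `finish[...]` stop action, the `react_loop` helper and the scripted `llm` are all assumptions made for illustration; a real agent would call a model and real tools instead.

```python
from typing import Callable, Dict, List, Tuple


def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        # The model is expected to emit "Thought: ...\nAction: tool[argument]".
        step = llm("\n".join(transcript))
        transcript.append(step)
        actions = [ln for ln in step.splitlines() if ln.startswith("Action:")]
        if not actions:
            break  # malformed step: give up rather than guess an action
        name, _, rest = actions[-1][len("Action:"):].strip().partition("[")
        argument = rest.rstrip("]")
        if name == "finish":
            return argument, transcript
        # Run the tool and feed its result back as the next observation.
        transcript.append(f"Observation: {tools[name](argument)}")
    return "", transcript


# Scripted model output so the sketch runs without an LLM.
scripted = iter([
    "Thought: I need the capital of France.\nAction: search[capital of France]",
    "Thought: The observation answers the question.\nAction: finish[Paris]",
])
answer, transcript = react_loop(
    "What is the capital of France?",
    llm=lambda prompt: next(scripted),
    tools={"search": lambda q: "Paris is the capital of France."},
)
```

Dropping the `Thought:` line from the expected format would turn this into the Act-only setup.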

Fig. 2. Examples of reasoning trajectories for knowledge-intensive tasks (e.g. HotpotQA, FEVER) and decision-making tasks (e.g. AlfWorld Env, WebShop). (Image source: Yao et al. 2023)

In experiments on both knowledge-intensive tasks and decision-making tasks, ReAct works better than the Act-only baseline, in which the Thought: … step is removed.

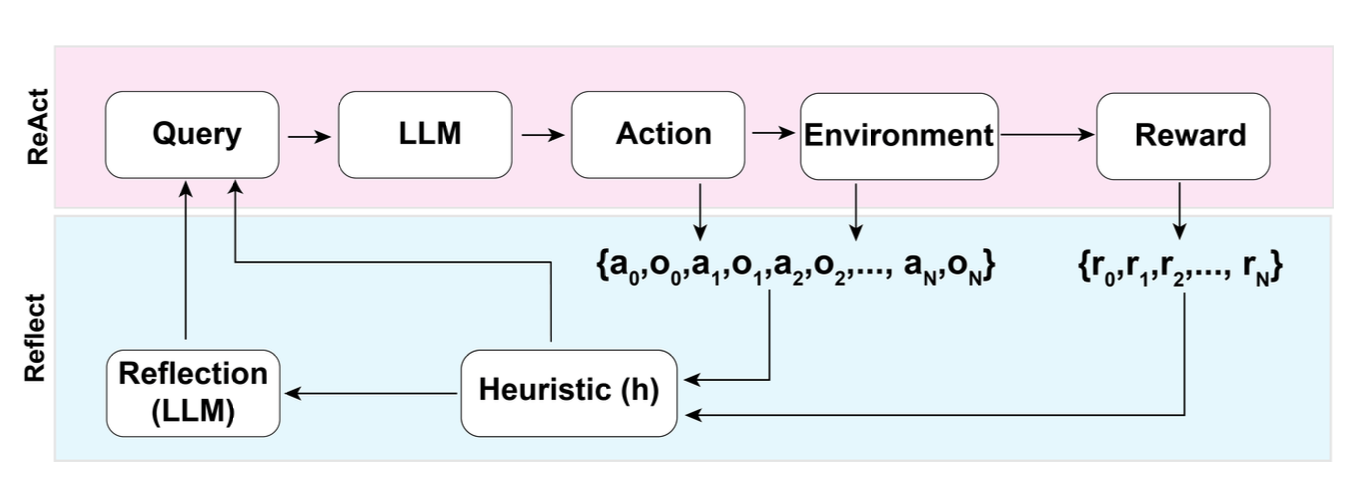

Reflexion (Shinn & Labash 2023) is a framework that equips agents with dynamic memory and self-reflection capabilities to improve reasoning skills.

Reflexion has a standard RL setup, in which the reward model provides a simple binary reward and the action space follows the setup in ReAct, where the task-specific action space is augmented with language to enable complex reasoning steps. After each action $a_t$, the agent computes a heuristic $h_t$ and may optionally decide to reset the environment to start a new trial, depending on the self-reflection results.

Fig. 3. Illustration of the Reflexion framework. (Image source: Shinn & Labash, 2023)

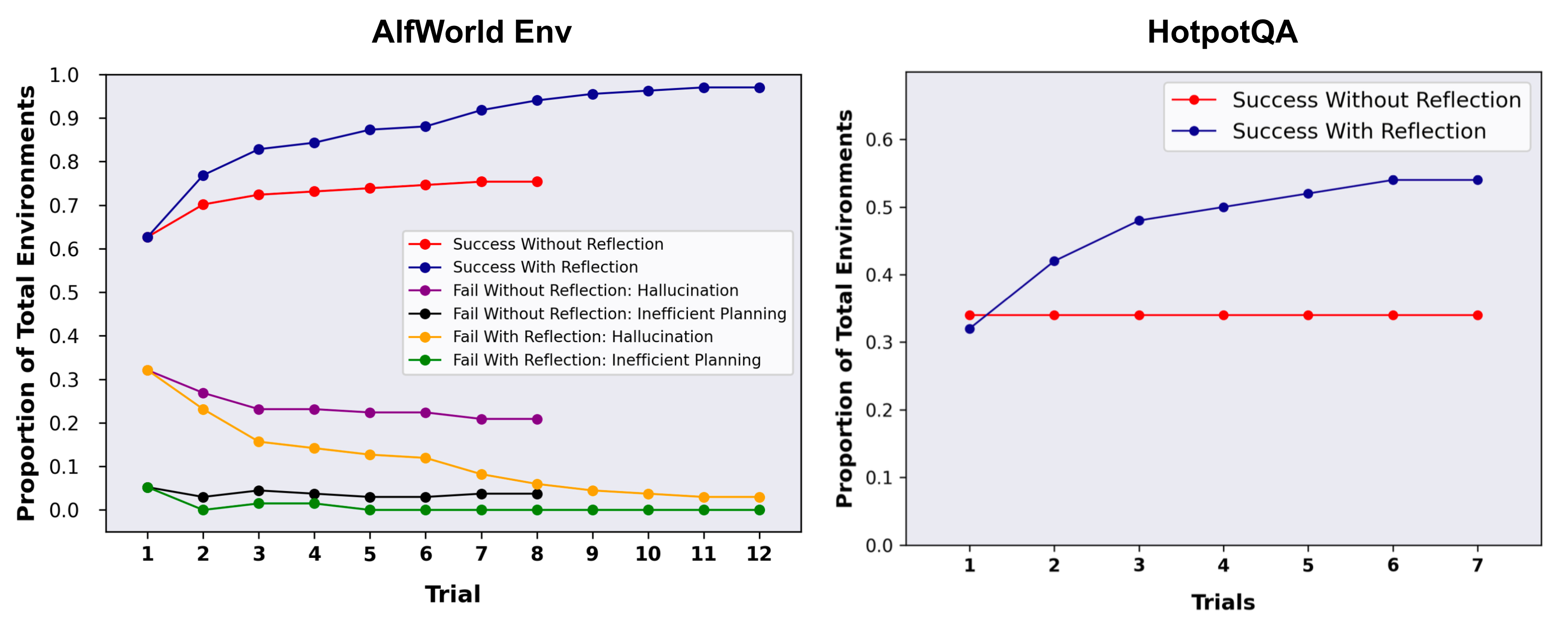

The heuristic function determines when the trajectory is inefficient or contains hallucination and should be stopped. Inefficient planning refers to trajectories that take too long without success.

Hallucination is defined as encountering a sequence of consecutive identical actions that lead to the same observation in the environment.

Self-reflection is created by showing two-shot examples to the LLM, where each example is a pair of (failed trajectory, ideal reflection for guiding future changes in the plan).

Reflections are then added to the agent’s working memory, up to three, to be used as context for querying the LLM.
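The stopping heuristic and the bounded working memory described above can be sketched like this. The thresholds (`repeat_limit`, `max_steps`) and the choice to keep the three most recent reflections are assumptions for illustration; the text only says that up to three reflections are kept.

```python
from collections import deque
from typing import Deque, List, Tuple

Step = Tuple[str, str]  # (action, observation)


def is_hallucinating(trajectory: List[Step], repeat_limit: int = 3) -> bool:
    """True if the last `repeat_limit` steps are identical (action, observation) pairs."""
    if len(trajectory) < repeat_limit:
        return False
    tail = trajectory[-repeat_limit:]
    return all(step == tail[0] for step in tail)


def should_stop(trajectory: List[Step], max_steps: int = 30, repeat_limit: int = 3) -> bool:
    # Inefficient planning: the trajectory has run too long without success.
    inefficient = len(trajectory) >= max_steps
    return inefficient or is_hallucinating(trajectory, repeat_limit)


# Working memory holds at most three reflections; older ones fall out.
reflections: Deque[str] = deque(maxlen=3)
for note in ["r1", "r2", "r3", "r4"]:
    reflections.append(note)

stuck = [("open drawer", "The drawer is locked.")] * 3
progressing = [("look", "You see a desk."), ("go desk", "You are at the desk.")]
```

The contents of `reflections` would be prepended to the prompt on the next trial.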

Fig. 4. Experiments on AlfWorld Env and HotpotQA. Hallucination is a more common failure than inefficient planning in AlfWorld. (Image source: Shinn & Labash, 2023)

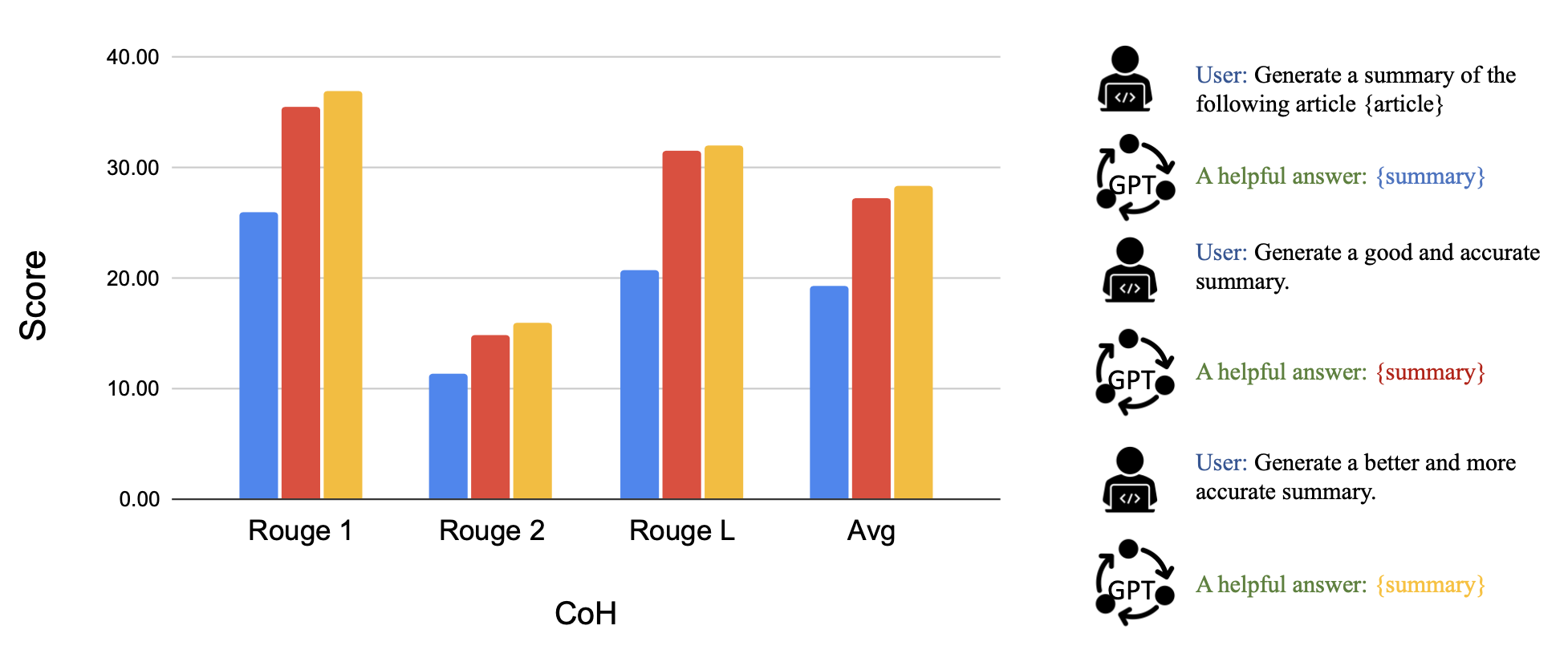

Chain of Hindsight (CoH; Liu et al. 2023) encourages the model to improve on its own outputs by explicitly presenting it with a sequence of past outputs, each annotated with feedback. The human feedback data is a collection of model completions for a prompt, each paired with a human rating and the corresponding hindsight feedback.

To avoid overfitting, CoH adds a regularization term to maximize the log-likelihood of the pre-training dataset. To avoid shortcutting and copying (because there are many common words in feedback sequences), they randomly mask 0% - 5% of past tokens during training.
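The random masking step can be illustrated with a short sketch. How the rate is drawn and what replaces a masked token are not specified in the text, so sampling one rate per sequence and using a `<mask>` placeholder are assumptions here, as is the helper name `mask_past_tokens`.

```python
import random
from typing import List, Optional


def mask_past_tokens(
    tokens: List[str],
    max_rate: float = 0.05,
    mask_token: str = "<mask>",
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Replace a random 0% to `max_rate` fraction of past tokens with a mask."""
    rng = rng or random.Random()
    rate = rng.uniform(0.0, max_rate)  # per-sequence masking rate (assumed)
    return [mask_token if rng.random() < rate else tok for tok in tokens]


past = [f"w{i}" for i in range(1000)]
masked = mask_past_tokens(past, rng=random.Random(0))
```

Because only a few tokens disappear, the model can still read the feedback but cannot rely on copying long spans verbatim.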

The training dataset in their experiments is a combination of WebGPT comparisons, summarization from human feedback and a human preference dataset.

Fig. 5. After fine-tuning with CoH, the model can follow instructions to produce outputs with incremental improvement in a sequence. (Image source: Liu et al. 2023)

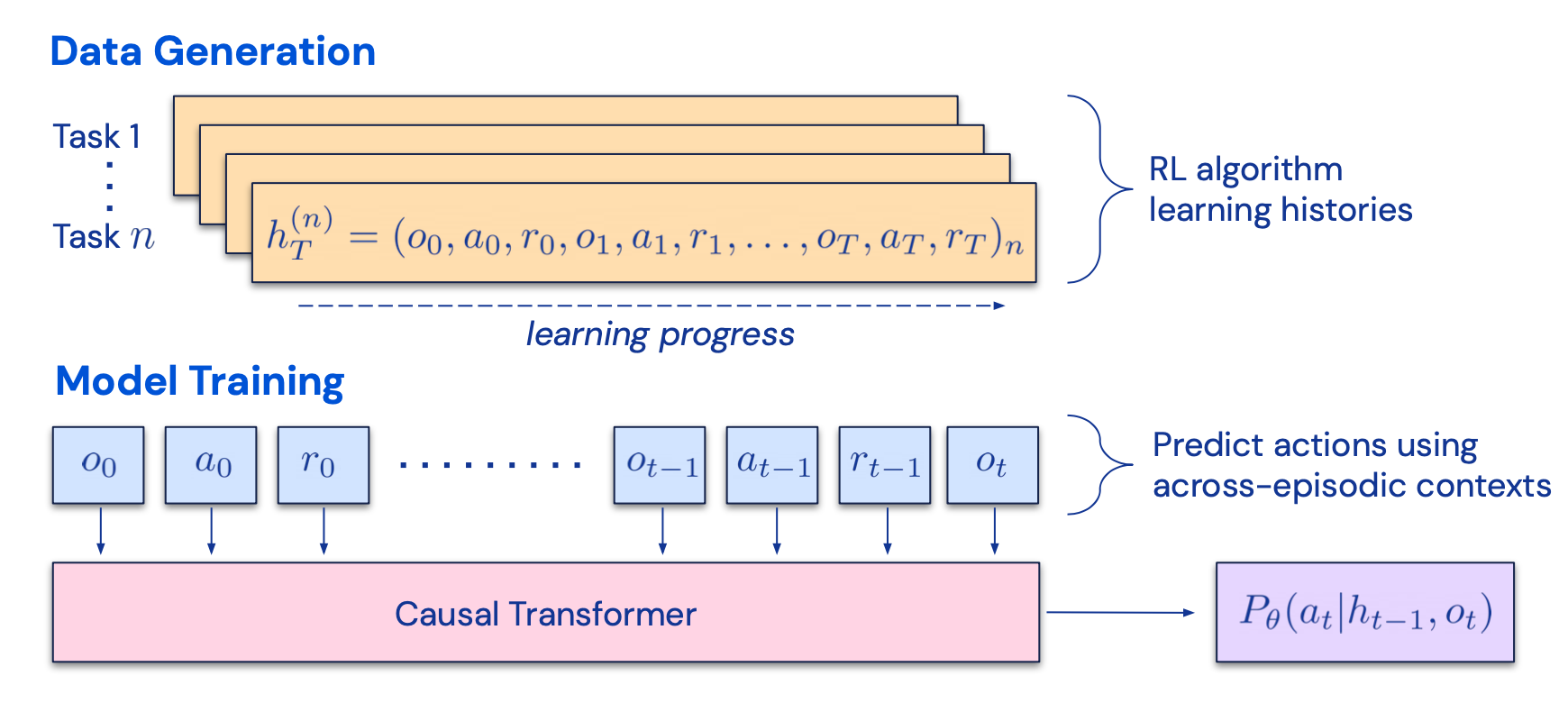

The idea of CoH is to present a history of sequentially improved outputs in context and train the model to pick up on the trend and produce better outputs. Algorithm Distillation (AD; Laskin et al. 2023) applies the same idea to cross-episode trajectories in reinforcement learning tasks, where an algorithm is encapsulated in a long history-conditioned policy. Considering that an agent interacts with the environment many times and gets a little better in each episode, AD concatenates this learning history and feeds it into the model.

Hence we should expect the next predicted action to lead to better performance than previous trials. The goal is to learn the process of RL instead of training a task-specific policy itself.

Fig. 6. Illustration of how Algorithm Distillation (AD) works. (Image source: Laskin et al. 2023)

The paper hypothesizes that any algorithm that generates a set of learning histories can be distilled into a neural network by performing behavioral cloning over actions. The history data is generated by a set of source policies, each trained for a specific task.

At the training stage, during each RL run, a random task is sampled and a subsequence of multi-episode history is used for training, such that the learned policy is task-agnostic.

In reality, the model has a limited context window length, so episodes should be short enough to construct multi-episode history. Multi-episodic contexts of 2-4 episodes are necessary to learn a near-optimal in-context RL algorithm.

The emergence of in-context RL requires a long enough context.
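The data preparation described above, concatenating a learning history across episodes and sampling a cross-episode subsequence as the training context, can be sketched as follows. The `Transition` layout and the helper names are assumptions for illustration, not the paper's code.

```python
import random
from typing import List, Tuple

Transition = Tuple[str, str, float]  # (observation, action, reward)


def flatten_history(episodes: List[List[Transition]]) -> List[Transition]:
    """Concatenate episodes in the order they were collected during learning."""
    return [t for episode in episodes for t in episode]


def sample_context(
    history: List[Transition], context_len: int, rng: random.Random
) -> List[Transition]:
    """Sample a contiguous subsequence that may span several episodes."""
    if len(history) <= context_len:
        return list(history)
    start = rng.randrange(len(history) - context_len + 1)
    return history[start:start + context_len]


# Three short episodes from one source policy that gradually improves.
episodes = [
    [("s0", "left", 0.0), ("s1", "left", 0.0)],
    [("s0", "right", 0.0), ("s1", "left", 0.0)],
    [("s0", "right", 0.0), ("s1", "right", 1.0)],
]
history = flatten_history(episodes)
context = sample_context(history, context_len=4, rng=random.Random(0))
```

With two transitions per episode, a context of four transitions always covers at least two episodes, which is why short episodes matter for a fixed context window. A behavioral cloning loss would then train the model to predict each action from the transitions that precede it.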

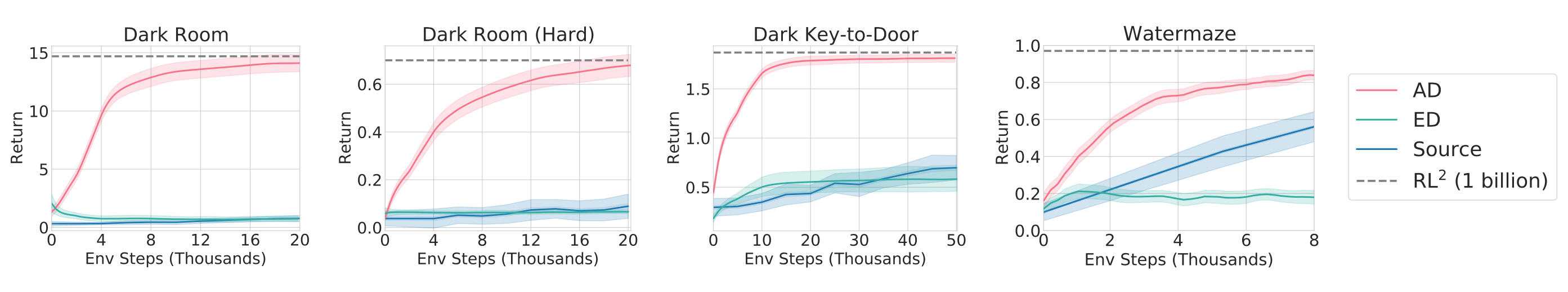

AD is compared with three baselines: ED (expert distillation, behavior cloning with expert trajectories instead of learning history), source policy (used for generating trajectories for distillation by UCB), and RL^2 (Duan et al. 2017; used as an upper bound since it needs online RL). AD demonstrates in-context RL with performance getting close to RL^2 despite only using offline RL, and it learns much faster than the other baselines.

When conditioned on partial training history of the source policy, AD also improves much faster than the ED baseline.

Fig. 7. Comparison of AD, ED, source policy and RL^2 on environments that require memory and exploration. Only binary reward is assigned. The source policies are trained with A3C for “dark” environments and DQN for watermaze. (Image source: Laskin et al. 2023)

Component Two: Memory

(Big thank you to ChatGPT for helping me draft this section. I’ve learned a lot about the human brain and data structures for fast MIPS in my conversations with ChatGPT.)

Types of Memory

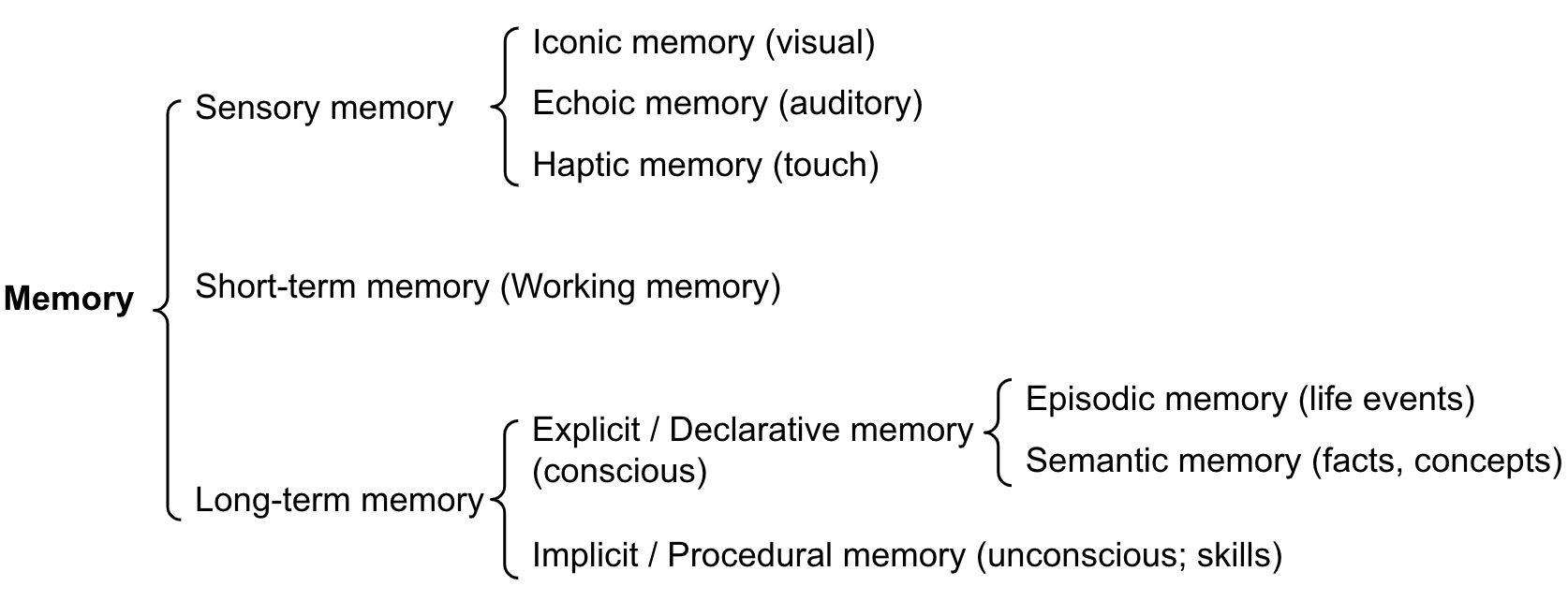

Memory can be defined as the processes used to acquire, store, retain, and later retrieve information. There are several types of memory in human brains.

- Sensory Memory: This is the earliest stage of memory, providing the ability to retain impressions of sensory information (visual, auditory, etc.) after the original stimuli have ended. Sensory memory typically only lasts for up to a few seconds. Subcategories include iconic memory (visual), echoic memory (auditory), and haptic memory (touch).
- Short-Term Memory (STM) or Working Memory: It stores information that we are currently aware of and need in order to carry out complex cognitive tasks such as learning and reasoning. Short-term memory is believed to have the capacity of about 7 items (Miller 1956) and lasts for 20-30 seconds.
- Long-Term Memory (LTM): Long-term memory can store information for a remarkably long time, ranging from a few days to decades, with an essentially unlimited storage capacity. There are two subtypes of LTM:
  - Explicit / declarative memory: This is memory of facts and events, and refers to those memories that can be consciously recalled, including episodic memory (events and experiences) and semantic memory (facts and concepts).
  - Implicit / procedural memory: This type of memory is unconscious and involves skills and routines that are performed automatically, like riding a bike or typing on a keyboard.

Fig. 8. Categorization of human memory.

We can roughly consider the following mappings:

- Sensory memory as learning embedding representations for raw inputs, including text, image or other modalities;
- Short-term memory as in-context learning. It is short and finite, as it is restricted by the finite context window length of Transformer.
- Long-term memory as the external vector store that the agent can attend to at query time, accessible via fast retrieval.

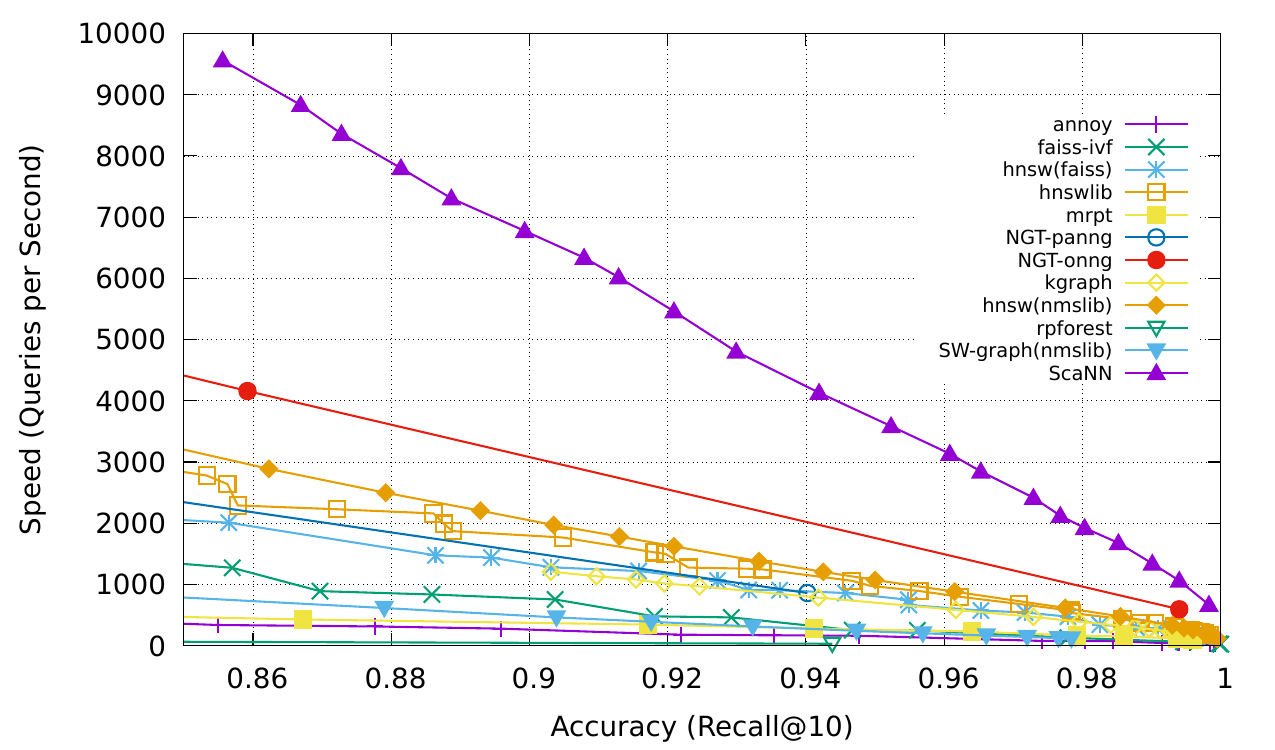

Maximum Inner Product Search (MIPS)

The external memory can alleviate the restriction of finite attention span. A standard practice is to save the embedding representation of information into a vector store database that can support fast maximum inner-product search (MIPS). To optimize the retrieval speed, the common choice is an approximate nearest neighbors (ANN) algorithm that returns approximately the top k nearest neighbors, trading a small loss of accuracy for a huge speedup.
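For reference, the exact (brute-force) version of MIPS is just a scan over every stored vector, which is what ANN methods approximate. The sketch below uses plain Python lists; the names `exact_mips` and `memory` are illustrative, not taken from any vector store library.

```python
import heapq
from typing import List, Sequence, Tuple


def inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def exact_mips(
    query: Sequence[float], store: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (index, score) for the k stored vectors with the largest inner product."""
    scored = ((i, inner_product(query, v)) for i, v in enumerate(store))
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


memory = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
top = exact_mips([1.0, 0.2], memory, k=2)
```

The scan costs one inner product per stored vector for every query, which is the cost that approximate indexes are designed to avoid.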

A few common choices of ANN algorithms for fast MIPS:

- LSH (Locality-Sensitive Hashing): It introduces a hashing function such that similar input items are mapped to the same buckets with high probability, where the number of buckets is much smaller than the number of inputs.
- ANNOY (Approximate Nearest Neighbors Oh Yeah): The core data structure is random projection trees, a set of binary trees where each non-leaf node represents a hyperplane splitting the input space in half and each leaf stores one data point. Trees are built independently and at random, so to some extent this mimics a hashing function. ANNOY search happens in all the trees, iteratively descending into the half that is closest to the query, and then aggregates the results. The idea is closely related to the KD tree but is a lot more scalable.
- HNSW (Hierarchical Navigable Small World): It is inspired by the idea of small world networks, where most nodes can be reached from any other node within a small number of steps, e.g. the “six degrees of separation” feature of social networks. HNSW builds hierarchical layers of these small-world graphs, where the bottom layers contain the actual data points. The layers in the middle create shortcuts to speed up search. When performing a search, HNSW starts from a random node in the top layer and navigates towards the target. When it can’t get any closer, it moves down to the next layer, until it reaches the bottom layer. Each move in the upper layers can potentially cover a large distance in the data space, and each move in the lower layers refines the search quality.
- FAISS (Facebook AI Similarity Search): It operates on the assumption that in high dimensional space, distances between nodes follow a Gaussian distribution and thus there should exist clustering of data points. FAISS applies vector quantization by partitioning the vector space into clusters and then refining the quantization within clusters. Search first looks for cluster candidates with coarse quantization and then further looks into each cluster with finer quantization.
- ScaNN (Scalable Nearest Neighbors): The main innovation in ScaNN is anisotropic vector quantization. It quantizes a data point $x_i$ to $\tilde{x}_i$ such that the inner product $\langle q, \tilde{x}_i \rangle$ is as close as possible to the original inner product $\langle q, x_i \rangle$, instead of picking the closest quantization centroid.
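To make the bucketing idea behind LSH concrete, here is a minimal random-hyperplane sketch. Random hyperplanes are one common LSH family and target angular (cosine) similarity rather than raw inner product, so treat this as an illustration of hashing similar items together, not as a MIPS index. All function names are assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def make_hyperplanes(dim: int, n_bits: int, rng: random.Random) -> List[List[float]]:
    """Draw `n_bits` random hyperplanes through the origin."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def signature(vec: Sequence[float], planes: List[List[float]]) -> Tuple[int, ...]:
    # One bit per hyperplane: which side of the plane the vector falls on.
    return tuple(
        int(sum(p * x for p, x in zip(plane, vec)) >= 0) for plane in planes
    )


def build_index(
    vectors: List[Sequence[float]], planes: List[List[float]]
) -> Dict[Tuple[int, ...], List[int]]:
    """Group vector ids by signature; each distinct signature is one bucket."""
    buckets: Dict[Tuple[int, ...], List[int]] = defaultdict(list)
    for i, v in enumerate(vectors):
        buckets[signature(v, planes)].append(i)
    return buckets


planes = make_hyperplanes(dim=3, n_bits=8, rng=random.Random(0))
vectors = [[1.0, 2.0, -0.5], [2.0, 4.0, -1.0], [-3.0, 0.5, 2.0]]
index = build_index(vectors, planes)
```

At query time only the bucket matching the query's signature is scanned. Vectors 0 and 1 point in the same direction, so they always land in the same bucket; more bits mean smaller buckets but a higher chance of missing a true neighbor.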

Fig. 9. Comparison of MIPS algorithms, measured in recall@10. (Image source: Google Blog, 2020)

Check more MIPS algorithms and performance comparisons at ann-benchmarks.com.

Component Three: Tool Use

Tool use is a remarkable and distinguishing characteristic of human beings. We create, modify and utilize external objects to do things that go beyond our physical and cognitive limits. Equipping LLMs with external tools can significantly extend the model capabilities.

Fig. 10. A picture of a sea otter using a rock to crack open a seashell while floating in the water. While some other animals can use tools, the complexity is not comparable with humans. (Image source: Animals using tools)

MRKL (Karpas et al. 2022), short for “Modular Reasoning, Knowledge and Language”, is a neuro-symbolic architecture for autonomous agents.

A MRKL system is proposed to contain a collection of “expert” modules, and the general-purpose LLM works as a router to route inquiries to the most suitable expert module.

These modules can be neural (e.g. deep learning models) or symbolic (e.g. math calculator, currency converter, weather API).

They did an experiment on fine-tuning an LLM to call a calculator, using arithmetic as a test case.

Their experiments showed that it was harder to solve verbal math problems than explicitly stated math problems because the LLM (7B Jurassic1-large model) failed to reliably extract the right arguments for the basic arithmetic.

The results highlight that when external symbolic tools can work reliably, knowing when and how to use the tools is crucial, and this is determined by the LLM capability.
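The router-plus-experts layout can be sketched as below. In a MRKL system the router is the LLM itself; here `toy_router` is a regex stand-in so the example runs without a model, and `calculator`, `fallback` and `answer` are hypothetical names. The argument extraction inside the router is exactly the step the experiments found unreliable for verbal math problems.

```python
import operator
import re
from typing import Callable, Dict, Tuple

NUMBER = r"-?\d+(?:\.\d+)?"
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Symbolic expert: evaluates a single 'a op b' arithmetic expression."""
    match = re.fullmatch(rf"\s*({NUMBER})\s*([+\-*/])\s*({NUMBER})\s*", expression)
    if match is None:
        raise ValueError(f"unsupported expression: {expression!r}")
    left, op, right = match.groups()
    return str(OPS[op](float(left), float(right)))


def fallback(query: str) -> str:
    """Placeholder for a neural expert, e.g. the LLM answering directly."""
    return f"[LLM answer to: {query}]"


def toy_router(query: str) -> Tuple[str, str]:
    """Stand-in for the LLM router: pick an expert and extract its argument."""
    found = re.search(rf"{NUMBER}\s*[+\-*/]\s*{NUMBER}", query)
    if found:
        return "calculator", found.group(0)
    return "fallback", query


EXPERTS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "fallback": fallback,
}


def answer(query: str, router: Callable[[str], Tuple[str, str]] = toy_router) -> str:
    name, argument = router(query)
    return EXPERTS[name](argument)
```

For example, `answer("What is 12 * 7?")` is routed to the calculator, while a question with no arithmetic in it goes to the fallback expert.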

Both TALM (Tool Augmented Language Models; Parisi et al. 2022) and Toolformer (Schick et al. 2023) fine-tune a LM to learn to use external tool APIs. The dataset is expanded based on whether a newly added API call annotation can improve the quality of model outputs. See more details in the “External APIs” section of Prompt Engineering.

ChatGPT Plugins and OpenAI API function calling are good examples of LLMs augmented with tool use capability working in practice. The collection of tool APIs can be provided by other developers (as in Plugins) or self-defined (as in function calls).

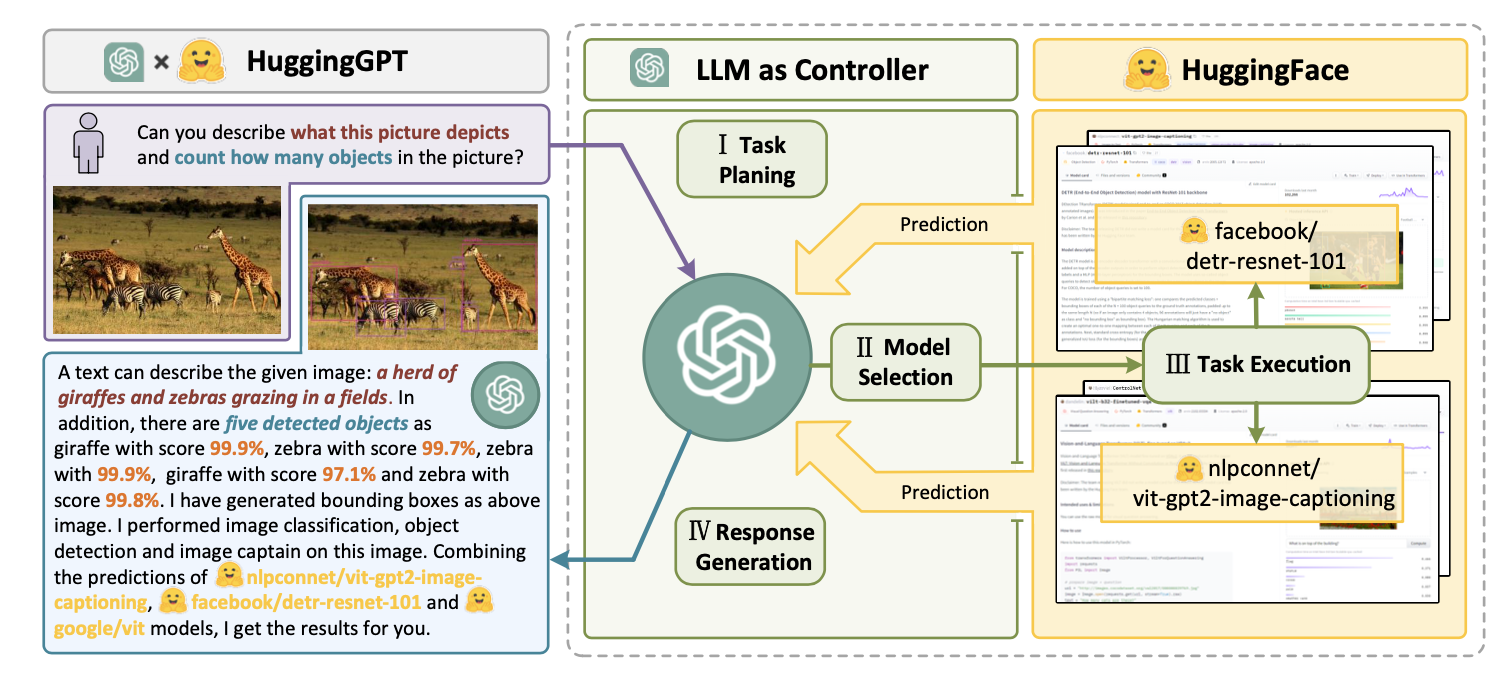

HuggingGPT (Shen et al. 2023) is a framework that uses ChatGPT as the task planner to select models available on the HuggingFace platform according to the model descriptions and to summarize the response based on the execution results.

Fig. 11. Illustration of how HuggingGPT works. (Image source: Shen et al. 2023)

The system comprises 4 stages:

(1) Task planning: The LLM works as the brain and parses the user requests into multiple tasks. There are four attributes associated with each task: task type, ID, dependencies, and arguments. They use few-shot examples to guide the LLM to do task parsing and planning.

Instruction:

The AI assistant can parse user input to several tasks: [{"task": task, "id", task_id, "dep": dependency_task_ids, "args": {"text": text, "image": URL, "audio": URL, "video": URL}}]. The "dep" field denotes the id of the previous task which generates a new resource that the current task relies on. A special tag "<resource>-task_id" refers to the generated text, image, audio and video in the dependency task with id as task_id. The task MUST be selected from the following options: {{ Available Task List }}. There is a logical relationship between tasks, please note their order. If the user input can’t be parsed, you need to reply empty JSON. Here are several cases for your reference: {{ Demonstrations }}. The chat history is recorded as {{ Chat History }}. From this chat history, you can find the path of the user-mentioned resources for your task planning.
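Once the planner returns its task list, the "dep" field defines an execution order. The sketch below parses a plan and orders tasks so that each one runs after its dependencies. The example task names, the `execution_order` helper and the convention that an id not present in the plan (such as -1) means "no dependency" are assumptions for illustration.

```python
import json
from typing import Dict, List, Set


def execution_order(plan_json: str) -> List[int]:
    """Order task ids so every task runs after the tasks listed in its "dep" field."""
    tasks: Dict[int, dict] = {t["id"]: t for t in json.loads(plan_json)}
    ordered: List[int] = []
    visiting: Set[int] = set()

    def visit(task_id: int) -> None:
        if task_id in ordered:
            return
        if task_id in visiting:
            raise ValueError(f"cyclic dependency at task {task_id}")
        visiting.add(task_id)
        for dep in tasks[task_id]["dep"]:
            if dep in tasks:  # ids outside the plan are treated as "no dependency"
                visit(dep)
        visiting.discard(task_id)
        ordered.append(task_id)

    for task_id in tasks:
        visit(task_id)
    return ordered


plan = json.dumps([
    {"task": "image-to-text", "id": 1, "dep": [0], "args": {"image": "<resource>-0"}},
    {"task": "pose-detection", "id": 0, "dep": [-1], "args": {"image": "example.jpg"}},
])
order = execution_order(plan)
```

An empty JSON list, which the prompt asks for when the input cannot be parsed, yields an empty order.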

(2) Model selection: The LLM distributes the tasks to expert models, where the request is framed as a multiple-choice question. The LLM is presented with a list of models to choose from. Due to the limited context length, task-type-based filtration is needed.

Instruction:

Given the user request and the call command, the AI assistant helps the user to select a suitable model from a list of models to process the user request. The AI assistant merely outputs the model id of the most appropriate model. The output must be in a strict JSON format: "id": "id", "reason": "your detail reason for the choice". We have a list of models for you to choose from {{ Candidate Models }}. Please select one model from the list.

(3) Task execution: Expert models execute on the specific tasks and log results.

Instruction:

With the input and the inference results, the AI assistant needs to describe the process and results. The previous stages can be formed as - User Input: {{ User Input }}, Task Planning: {{ Tasks }}, Model Selection: {{ Model Assignment }}, Task Execution: {{ Predictions }}. You must first answer the user’s request in a straightforward manner. Then describe the task process and show your analysis and model inference results to the user in the first person. If inference results contain a file path, must tell the user the complete file path.

(4) Response generation: The LLM receives the execution results and provides summarized results to users.

To put HuggingGPT into real-world usage, a few challenges need to be solved: (1) Efficiency improvement is needed, as both LLM inference rounds and interactions with other models slow down the process; (2) It relies on a long context window to communicate over complicated task content; (3) Stability improvement is needed for both LLM outputs and external model services.

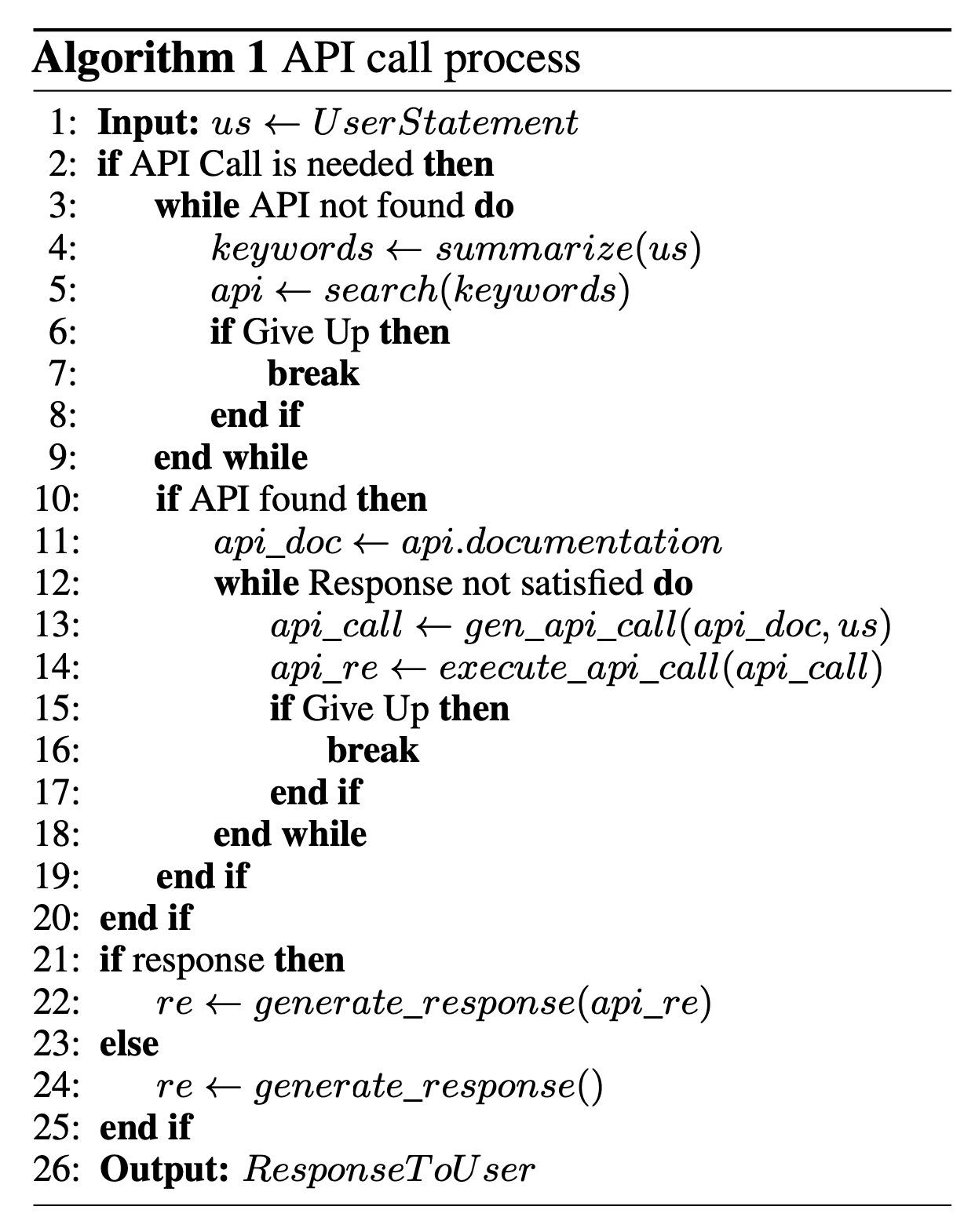

API-Bank (Li et al. 2023) is a benchmark for evaluating the performance of tool-augmented LLMs. It contains 53 commonly used API tools, a complete tool-augmented LLM workflow, and 264 annotated dialogues that involve 568 API calls.

The selection of APIs is quite diverse, including search engines, calculator, calendar queries, smart home control, schedule management, health data management, account authentication workflow and more.

Because there are a large number of APIs, the LLM first has access to an API search engine to find the right API to call and then uses the corresponding documentation to make a call.

Fig. 12. Pseudo code of how LLM makes an API call in API-Bank. (Image source: Li et al. 2023)

In the API-Bank workflow, LLMs need to make a few decisions, and at each step we can evaluate how accurate that decision is. Decisions include:

- Whether an API call is needed.
- Identify the right API to call: if not good enough, LLMs need to iteratively modify the API inputs (e.g. deciding search keywords for Search Engine API).
- Response based on the API results: the model can choose to refine and call again if results are not satisfactory.

This benchmark evaluates the agent’s tool use capabilities at three levels:

- Level-1 evaluates the ability to call the API. Given an API’s description, the model needs to determine whether to call a given API, call it correctly, and respond properly to API returns.
- Level-2 examines the ability to retrieve the API. The model needs to search for possible APIs that may solve the user’s requirement and learn how to use them by reading documentation.
- Level-3 assesses the ability to plan API beyond retrieve and call. Given unclear user requests (e.g. schedule group meetings, book flight/hotel/restaurant for a trip), the model may have to conduct multiple API calls to solve it.

Case Studies

Scientific Discovery Agent

ChemCrow (Bran et al. 2023) is a domain-specific example in which an LLM is augmented with 13 expert-designed tools to accomplish tasks across organic synthesis, drug discovery, and materials design. The workflow, implemented in LangChain, reflects what was previously described in ReAct and MRKL and combines CoT reasoning with tools relevant to the tasks:

- The LLM is provided with a list of tool names, descriptions of their utility, and details about the expected input/output.
- It is then instructed to answer a user-given prompt using the tools provided when necessary. The instruction suggests that the model follow the ReAct format: Thought, Action, Action Input, Observation.

One interesting observation is that while the LLM-based evaluation concluded that GPT-4 and ChemCrow perform nearly equivalently, human evaluations by experts, oriented towards the completion and chemical correctness of the solutions, showed that ChemCrow outperforms GPT-4 by a large margin.

This indicates a potential problem with using an LLM to evaluate its own performance in domains that require deep expertise. The lack of expertise may cause LLMs to be unaware of their flaws and thus unable to judge the correctness of task results well.

Boiko et al. (2023) also looked into LLM-empowered agents for scientific discovery, to handle autonomous design, planning, and performance of complex scientific experiments.

Boiko 等人(2023)还研究了LLM赋能的科学发现代理,以处理自主设计、规划和执行复杂的科学实验。

This agent can use tools to browse the Internet, read documentation, execute code, call robotics experimentation APIs and leverage other LLMs.

For example, when requested to "develop a novel anticancer drug", the model came up with the following reasoning steps:

- inquired about current trends in anticancer drug discovery;

- selected a target;

- requested a scaffold targeting these compounds;

- Once the compound was identified, the model attempted its synthesis.

They also discussed the risks, especially with illicit drugs and bioweapons. They developed a test set containing a list of known chemical weapon agents and asked the agent to synthesize them. 4 out of 11 requests (36%) were accepted to obtain a synthesis solution, and the agent attempted to consult documentation to execute the procedure. The other 7 out of 11 were rejected; among these 7 rejected cases, 5 happened after a Web search while 2 were rejected based on the prompt only.

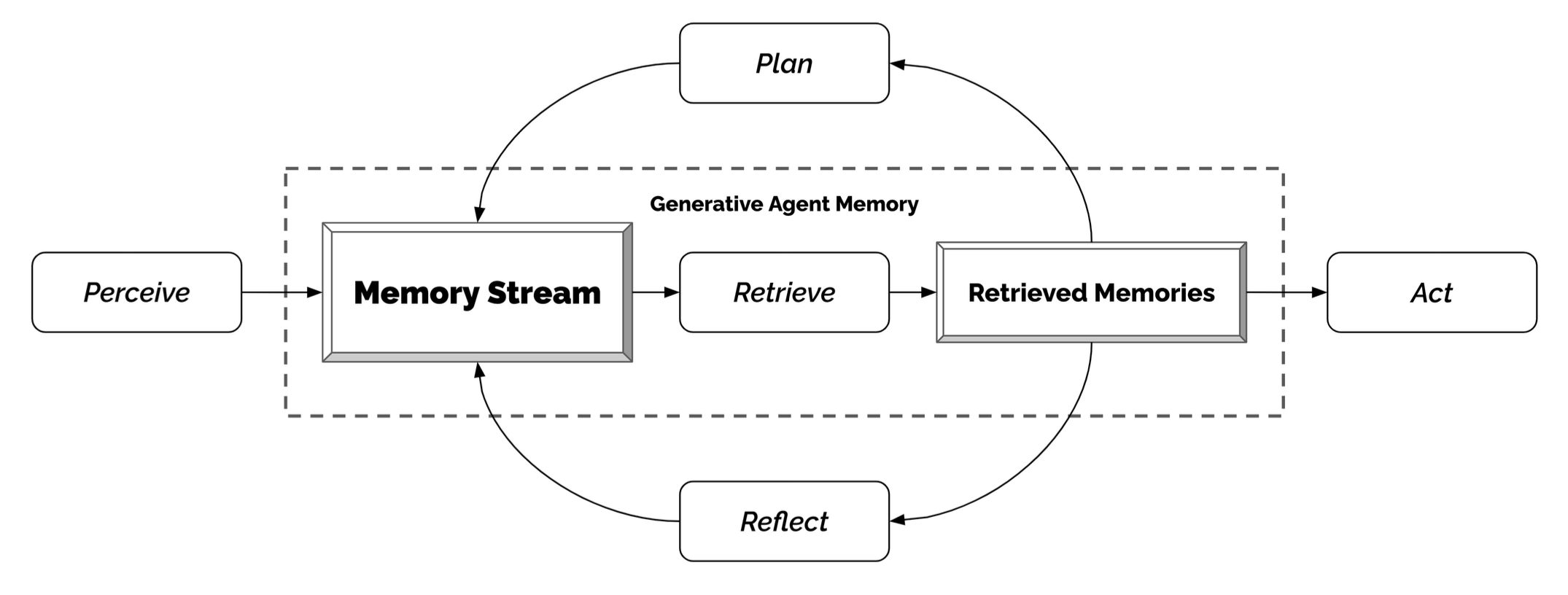

Generative Agents Simulation

Generative Agents (Park et al. 2023) is a super fun experiment where 25 virtual characters, each controlled by an LLM-powered agent, live and interact in a sandbox environment inspired by The Sims. Generative agents create believable simulacra of human behavior for interactive applications.

The design of generative agents combines an LLM with memory, planning and reflection mechanisms to enable agents to behave conditioned on past experience, as well as to interact with other agents.

- Memory stream: a long-term memory module (external database) that records a comprehensive list of agents’ experience in natural language.

  - Each element is an observation, an event directly provided by the agent.

  - Inter-agent communication can trigger new natural language statements.

- Retrieval model: surfaces the context to inform the agent’s behavior, according to relevance, recency and importance.

  - Recency: recent events have higher scores.

  - Importance: distinguish mundane from core memories. Ask LM directly.

  - Relevance: based on how related it is to the current situation / query.

- Reflection mechanism: synthesizes memories into higher-level inferences over time and guides the agent’s future behavior. They are higher-level summaries of past events (← note that this is a bit different from self-reflection above).

  - Prompt LM with the 100 most recent observations to generate the 3 most salient high-level questions given a set of observations/statements. Then ask LM to answer those questions.

- Planning & Reacting: translate the reflections and the environment information into actions.

  - Planning essentially aims to optimize believability at the moment vs. over time.

  - Prompt template: {Intro of an agent X}. Here is X's plan today in broad strokes: 1)

  - Relationships between agents and observations of one agent by another are all taken into consideration for planning and reacting.

  - Environment information is represented in a tree structure.
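The retrieval model above ranks memories by recency, importance and relevance. A minimal Python sketch of one way to combine the three is shown here. The exponential decay rate, the equal weights, the use of cosine similarity for relevance, and the `Memory`, `score` and `retrieve` names are assumptions of this sketch rather than details taken from the description above.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Memory:
    text: str
    hours_since_access: float  # input to the recency term
    importance: float          # e.g. an LM-assigned rating rescaled to [0, 1]
    embedding: List[float]     # vector used for the relevance term


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def score(memory: Memory, query: Sequence[float],
          decay: float = 0.99, weights=(1.0, 1.0, 1.0)) -> float:
    """Weighted sum of recency, importance and relevance (assumed form)."""
    recency = decay ** memory.hours_since_access  # newer memories score higher
    relevance = cosine(memory.embedding, query)
    w_rec, w_imp, w_rel = weights
    return w_rec * recency + w_imp * memory.importance + w_rel * relevance


def retrieve(memories: List[Memory], query: Sequence[float], k: int = 3) -> List[Memory]:
    """Return the k highest-scoring memories for the current query."""
    return sorted(memories, key=lambda m: score(m, query), reverse=True)[:k]


if __name__ == "__main__":
    stream = [
        Memory("ate breakfast", 1.0, 0.1, [1.0, 0.0]),
        Memory("broke up with partner", 48.0, 1.0, [0.0, 1.0]),
        Memory("talked about the party", 2.0, 0.5, [0.0, 1.0]),
    ]
    for m in retrieve(stream, [0.0, 1.0], k=2):
        print(m.text)
```

In this toy stream, an old but important and relevant memory can still outrank a fresh but mundane one, which is the behavior the three-factor ranking is meant to produce.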

Fig. 13. The generative agent architecture. (Image source: Park et al. 2023 )

This fun simulation results in emergent social behavior, such as information diffusion, relationship memory (e.g. two agents continuing the conversation topic) and coordination of social events (e.g. host a party and invite many others).

Proof-of-Concept Examples

AutoGPT has drawn a lot of attention to the possibility of setting up autonomous agents with an LLM as the main controller. It has quite a lot of reliability issues given the natural language interface, but it is nevertheless a cool proof-of-concept demo.

A lot of code in AutoGPT is about format parsing.
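As a rough illustration of what such format parsing involves, the sketch below validates a reply written in the JSON response format that AutoGPT's system message requests (a `thoughts` object plus a `command` with `name` and `args`). This is not AutoGPT's actual code: the `parse_agent_reply` helper, the brace-trimming heuristic, and the small `ALLOWED_COMMANDS` subset are assumptions chosen for the example.

```python
import json
from typing import Any, Dict, Tuple

# A subset of the command names listed in the system message.
ALLOWED_COMMANDS = {"google", "browse_website", "write_to_file", "read_file", "task_complete"}


def parse_agent_reply(raw: str) -> Tuple[str, Dict[str, Any]]:
    """Return (command_name, args) or raise ValueError explaining the problem."""
    # Models sometimes wrap the JSON in extra prose, so keep only the text
    # between the first "{" and the last "}" (a heuristic, not a full parser).
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    try:
        reply = json.loads(raw[start:end + 1])
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc

    command = reply.get("command")
    if not isinstance(command, dict):
        raise ValueError("missing 'command' object")
    name, args = command.get("name"), command.get("args", {})
    if name not in ALLOWED_COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'args' must be an object")
    return name, args


if __name__ == "__main__":
    raw = 'Sure! {"thoughts": {"text": "search first"}, "command": {"name": "google", "args": {"input": "llm agents"}}}'
    print(parse_agent_reply(raw))
```

Raising a `ValueError` with a specific reason gives the caller something to feed back to the model when asking it to retry.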

Here is the system message used by AutoGPT, where {{...}} are user inputs:

You are {{ai-name}}, {{user-provided AI bot description}}.

Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications.

GOALS:

1. {{user-provided goal 1}}

2. {{user-provided goal 2}}

3. ...

4. ...

5. ...

Constraints:

1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files.

2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember.

3. No user assistance

4. Exclusively use the commands listed in double quotes e.g. "command name"

5. Use subprocesses for commands that will not terminate within a few minutes

Commands:

1. Google Search: "google", args: "input": "<search>"

2. Browse Website: "browse_website", args: "url": "<url>", "question": "<what_you_want_to_find_on_website>"

3. Start GPT Agent: "start_agent", args: "name": "<name>", "task": "<short_task_desc>", "prompt": "<prompt>"

4. Message GPT Agent: "message_agent", args: "key": "<key>", "message": "<message>"

5. List GPT Agents: "list_agents", args:

6. Delete GPT Agent: "delete_agent", args: "key": "<key>"

7. Clone Repository: "clone_repository", args: "repository_url": "<url>", "clone_path": "<directory>"

8. Write to file: "write_to_file", args: "file": "<file>", "text": "<text>"

9. Read file: "read_file", args: "file": "<file>"

10. Append to file: "append_to_file", args: "file": "<file>", "text": "<text>"

11. Delete file: "delete_file", args: "file": "<file>"

12. Search Files: "search_files", args: "directory": "<directory>"

13. Analyze Code: "analyze_code", args: "code": "<full_code_string>"

14. Get Improved Code: "improve_code", args: "suggestions": "<list_of_suggestions>", "code": "<full_code_string>"

15. Write Tests: "write_tests", args: "code": "<full_code_string>", "focus": "<list_of_focus_areas>"

16. Execute Python File: "execute_python_file", args: "file": "<file>"

17. Generate Image: "generate_image", args: "prompt": "<prompt>"

18. Send Tweet: "send_tweet", args: "text": "<text>"

19. Do Nothing: "do_nothing", args:

20. Task Complete (Shutdown): "task_complete", args: "reason": "<reason>"

Resources:

1. Internet access for searches and information gathering.

2. Long Term memory management.

3. GPT-3.5 powered Agents for delegation of simple tasks.

4. File output.

Performance Evaluation:

1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities.

2. Constructively self-criticize your big-picture behavior constantly.

3. Reflect on past decisions and strategies to refine your approach.

4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

You should only respond in JSON format as described below

Response Format:

{

"thoughts": {

"text": "thought",

"reasoning": "reasoning",

"plan": "- short bulleted\n- list that conveys\n- long-term plan",

"criticism": "constructive self-criticism",

"speak": "thoughts summary to say to user"

},

"command": {

"name": "command name",

"args": {

"arg name": "value"

}

}

}

Ensure the response can be parsed by Python json.loads

GPT-Engineer is another project to create a whole repository of code given a task specified in natural language. GPT-Engineer is instructed to think over a list of smaller components to build and to ask for user input to clarify questions as needed.

Here is a sample conversation for task clarification sent to the OpenAI ChatCompletion endpoint used by GPT-Engineer. The user inputs are wrapped in {{user input text}}.

[

{

"role": "system",

"content": "You will read instructions and not carry them out, only seek to clarify them.\nSpecifically you will first summarise a list of super short bullets of areas that need clarification.\nThen you will pick one clarifying question, and wait for an answer from the user.\n"

},

{

"role": "user",

"content": "We are writing {{a Super Mario game in python. MVC components split in separate files. Keyboard control.}}\n"

},

{

"role": "assistant",

"content": "Summary of areas that need clarification:\n1. Specifics of the Super Mario game (e.g. level design, characters, gameplay mechanics)\n2. Details about the MVC components (e.g. which components are in each file)\n3. Keyboard control implementation (e.g. which keys to use, how to handle input)\n\nClarifying question:\nCan you provide more details about the Super Mario game, such as level design, characters, and gameplay mechanics?"

},

{

"role": "user",

"content": "{{There are 10 levels in total. The main character is a plumber named Mario, who can walk and jump. It is a classical platform game just like Super Mario. The main character moves from left to right, trying to get to the destination, where there are many obstacles and attacks from enemies in the process.}}\n\nIs anything else unclear? If yes, only answer in the form:\n{remaining unclear areas} remaining questions.\n{Next question}\nIf everything is sufficiently clear, only answer \"Nothing more to clarify.\"."

},

{

"role": "assistant",

"content": "Remaining unclear areas: 2 remaining questions.\nCan you provide more information about how the MVC components are split into separate files?"

},

{

"role": "user",

"content": "{{Make your own assumptions and state them explicitly before starting}}"

}

]

Then, after these clarifications, the agent moved into the code writing mode with a different system message. System message:

You will get instructions for code to write. You will write a very long answer. Make sure that every detail of the architecture is, in the end, implemented as code. Make sure that every detail of the architecture is, in the end, implemented as code.

Think step by step and reason yourself to the right decisions to make sure we get it right. You will first lay out the names of the core classes, functions, methods that will be necessary, as well as a quick comment on their purpose.

Then you will output the content of each file including ALL code.

Each file must strictly follow a markdown code block format, where the following tokens must be replaced such that FILENAME is the lowercase file name including the file extension, LANG is the markup code block language for the code’s language, and CODE is the code:

FILENAME

\`\`\`LANG

CODE

\`\`\`

You will start with the “entrypoint” file, then go to the ones that are imported by that file, and so on. Please note that the code should be fully functional. No placeholders.

Follow a language and framework appropriate best practice file naming convention. Make sure that files contain all imports, types etc. Make sure that code in different files are compatible with each other.

Ensure to implement all code, if you are unsure, write a plausible implementation. Include module dependency or package manager dependency definition file. Before you finish, double check that all parts of the architecture is present in the files.

Useful to know: You almost always put different classes in different files. For Python, you always create an appropriate requirements.txt file. For NodeJS, you always create an appropriate package.json file.

You always add a comment briefly describing the purpose of the function definition. You try to add comments explaining very complex bits of logic.

You always follow the best practices for the requested languages in terms of describing the code written as a defined package/project.

Python toolbelt preferences:

- pytest

- dataclasses
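The system message asks for every file as a filename line followed by a fenced code block, which suggests the caller has to split the reply back into files. The Python sketch below shows one way that could be done. It is an illustration, not GPT-Engineer's own parser; the `parse_files` name and the regular expression are assumptions.

```python
import re
from typing import Dict

# Built programmatically so this example can itself sit inside a fenced block.
FENCE = "`" * 3

# A filename on its own line, then a fenced block with an optional language tag.
_FILE_BLOCK = re.compile(
    r"^(?P<name>[^\s`]+)[ \t]*\n"
    + FENCE + r"[^\n]*\n"
    + r"(?P<code>.*?)\n?" + FENCE,
    re.MULTILINE | re.DOTALL,
)


def parse_files(reply: str) -> Dict[str, str]:
    """Map each file name found in the reply to the code that follows it."""
    return {m.group("name"): m.group("code") for m in _FILE_BLOCK.finditer(reply)}


if __name__ == "__main__":
    reply = (
        "Core classes: Game\n\n"
        "main.py\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\n\n"
        "requirements.txt\n" + FENCE + "\npytest\n" + FENCE + "\n"
    )
    for name, code in parse_files(reply).items():
        print(name, "->", repr(code))
```

Prose lines such as the class overview are skipped because they are not immediately followed by a fence. A reply that deviates from the requested layout would silently yield fewer files, so a real caller would probably want to check the result against the expected file list.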

Conversation samples:

[

{

"role": "system",

"content": "You will get instructions for code to write.\nYou will write a very long answer. Make sure that every detail of the architecture is, in the end, implemented as code.\nMake sure that every detail of the architecture is, in the end, implemented as code.\n\nThink step by step and reason yourself to the right decisions to make sure we get it right.\nYou will first lay out the names of the core classes, functions, methods that will be necessary, as well as a quick comment on their purpose.\n\nThen you will output the content of each file including ALL code.\nEach file must strictly follow a markdown code block format, where the following tokens must be replaced such that\nFILENAME is the lowercase file name including the file extension,\nLANG is the markup code block language for the code's language, and CODE is the code:\n\nFILENAME\n\`\`\`LANG\nCODE\n\`\`\`\n\nYou will start with the \"entrypoint\" file, then go to the ones that are imported by that file, and so on.\nPlease note that the code should be fully functional. No placeholders.\n\nFollow a language and framework appropriate best practice file naming convention.\nMake sure that files contain all imports, types etc. Make sure that code in different files are compatible with each other.\nEnsure to implement all code, if you are unsure, write a plausible implementation.\nInclude module dependency or package manager dependency definition file.\nBefore you finish, double check that all parts of the architecture is present in the files.\n\nUseful to know:\nYou almost always put different classes in different files.\nFor Python, you always create an appropriate requirements.txt file.\nFor NodeJS, you always create an appropriate package.json file.\nYou always add a comment briefly describing the purpose of the function definition.\nYou try to add comments explaining very complex bits of logic.\nYou always follow the best practices for the requested languages in terms of describing the code written as a defined\npackage/project.\n\n\nPython toolbelt preferences:\n- pytest\n- dataclasses\n"

},

# … same conversation as earlier, ended with "Make your own assumptions and state them explicitly before starting".

{

"role": "assistant",

"content": "Assumptions:\n1. Model: The model will contain the game's data, such as level information, character states, and enemy positions.\n2. View: The view will handle the game's visuals, including rendering the game objects, backgrounds, and updating the display.\n3. Controller: The controller will manage user input, such as keyboard controls, and update the model accordingly.\n\nWith these assumptions in mind, is there any specific aspect of the keyboard control implementation you would like me to clarify?"

},

{

"role": "user",

"content": "Please now remember the steps:\n\nThink step by step and reason yourself to the right decisions to make sure we get it right.\nFirst lay out the names of the core classes, functions, methods that will be necessary, As well as a quick comment on their purpose.\n\nThen you will output the content of each file including ALL code.\nEach file must strictly follow a markdown code block format, where the following tokens must be replaced such that\nFILENAME is the lowercase file name including the file extension,\nLANG is the markup code block language for the code's language, and CODE is the code:\n\nFILENAME\n\`\`\`LANG\nCODE\n\`\`\`\n\nPlease note that the code should be fully functional. No placeholders.\n\nYou will start with the \"entrypoint\" file, then go to the ones that are imported by that file, and so on.\nFollow a language and framework appropriate best practice file naming convention.\nMake sure that files contain all imports, types etc. The code should be fully functional. Make sure that code in different files are compatible with each other.\nBefore you finish, double check that all parts of the architecture is present in the files.\n"

}

]

Challenges

After going through key ideas and demos of building LLM-centered agents, I start to see a couple of common limitations:

- Finite context length: The restricted context capacity limits the inclusion of historical information, detailed instructions, API call context, and responses. The design of the system has to work with this limited communication bandwidth, while mechanisms like self-reflection to learn from past mistakes would benefit a lot from long or infinite context windows. Although vector stores and retrieval can provide access to a larger knowledge pool, their representation power is not as powerful as full attention.

- Challenges in long-term planning and task decomposition: Planning over a lengthy history and effectively exploring the solution space remain challenging. LLMs struggle to adjust plans when faced with unexpected errors, making them less robust compared to humans who learn from trial and error.

- Reliability of natural language interface: Current agent systems rely on natural language as an interface between LLMs and external components such as memory and tools. However, the reliability of model outputs is questionable, as LLMs may make formatting errors and occasionally exhibit rebellious behavior (e.g. refuse to follow an instruction). Consequently, much of the agent demo code focuses on parsing model output.
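The finite context length limitation is easy to see in code. The Python sketch below keeps the system prompt and only as many of the most recent messages as fit a fixed budget, so older history is simply dropped. The `fit_to_budget` helper is hypothetical, and counting whitespace-separated words is a crude stand-in for real token counting; the 4000 default echoes the "~4000 word limit" mentioned in the AutoGPT system message.

```python
from typing import List


def fit_to_budget(system_prompt: str, history: List[str], budget_words: int = 4000) -> List[str]:
    """Keep the system prompt plus the newest messages that fit the budget."""
    used = len(system_prompt.split())
    kept: List[str] = []
    # Walk from newest to oldest and stop at the first message that overflows.
    for message in reversed(history):
        cost = len(message.split())
        if used + cost > budget_words:
            break
        kept.append(message)
        used += cost
    # Restore chronological order for the prompt.
    return [system_prompt] + kept[::-1]


if __name__ == "__main__":
    history = ["a b c", "d e", "f g h i"]
    print(fit_to_budget("sys prompt", history, budget_words=8))
```

Anything cut here is invisible to the model on the next call, which is why agents fall back on summaries or an external memory store for older context.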

Citation

Cited as:

Weng, Lilian. (Jun 2023). “LLM-powered Autonomous Agents”. Lil’Log. https://lilianweng.github.io/posts/2023-06-23-agent/.

Or

@article{weng2023agent,

title = "LLM-powered Autonomous Agents",

author = "Weng, Lilian",

journal = "lilianweng.github.io",

year = "2023",

month = "Jun",

url = "https://lilianweng.github.io/posts/2023-06-23-agent/"

}

References

[1] Wei et al. “Chain of thought prompting elicits reasoning in large language models.” NeurIPS 2022.

[2] Yao et al. “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv preprint arXiv:2305.10601 (2023).

[3] Liu et al. “Chain of Hindsight Aligns Language Models with Feedback.” arXiv preprint arXiv:2302.02676 (2023).

[4] Liu et al. “LLM+P: Empowering Large Language Models with Optimal Planning Proficiency.” arXiv preprint arXiv:2304.11477 (2023).

[5] Yao et al. “ReAct: Synergizing reasoning and acting in language models.” ICLR 2023.

[6] Google Blog. “Announcing ScaNN: Efficient Vector Similarity Search.” July 28, 2020.

[7] https://chat.openai.com/share/46ff149e-a4c7-4dd7-a800-fc4a642ea389

[8] Shinn & Labash. “Reflexion: an autonomous agent with dynamic memory and self-reflection.” arXiv preprint arXiv:2303.11366 (2023).

[9] Laskin et al. “In-context Reinforcement Learning with Algorithm Distillation.” ICLR 2023.

[10] Karpas et al. “MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning.” arXiv preprint arXiv:2205.00445 (2022).

[11] Nakano et al. “WebGPT: Browser-assisted question-answering with human feedback.” arXiv preprint arXiv:2112.09332 (2021).

[12] Parisi et al. “TALM: Tool Augmented Language Models.”

[13] Schick et al. “Toolformer: Language Models Can Teach Themselves to Use Tools.” arXiv preprint arXiv:2302.04761 (2023).

[14] Weaviate Blog. “Why is Vector Search so fast?” Sep 13, 2022.

[15] Li et al. “API-Bank: A Benchmark for Tool-Augmented LLMs.” arXiv preprint arXiv:2304.08244 (2023).

[16] Shen et al. “HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace.” arXiv preprint arXiv:2303.17580 (2023).

[17] Bran et al. “ChemCrow: Augmenting large-language models with chemistry tools.” arXiv preprint arXiv:2304.05376 (2023).

[18] Boiko et al. “Emergent autonomous scientific research capabilities of large language models.” arXiv preprint arXiv:2304.05332 (2023).

[19] Joon Sung Park, et al. “Generative Agents: Interactive Simulacra of Human Behavior.” arXiv preprint arXiv:2304.03442 (2023).

[20] AutoGPT. https://github.com/Significant-Gravitas/Auto-GPT

[21] GPT-Engineer. https://github.com/AntonOsika/gpt-engineer